The EHS Research Group

The EHS team is involved in a range of research projects exploring whether vibration can be used to enhance speech perception, music perception, and spatial hearing for hearing-impaired listeners (including hearing aid and cochlear implant users). This includes work exploring the limits of the human tactile and auditory systems, work with clinical populations, and the development of new haptic and auditory assistive technologies.

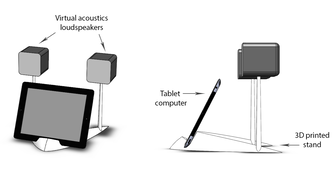

The team is also working on research and consultancy projects exploring whether haptic devices can be used to enhance balance perception and gunshot localisation, as well as a project focused on realistic sound reproduction using virtual acoustics (e.g., cross-talk cancellation) and enhancing gaming and virtual reality.

Research projects:

Hearing with Haptics

Balancing with Haptics

Virtual acoustics

Consultancy and collaboration:

Human testing for hearing, vibration, and balance (including with clinical populations)

Vibration and acoustic system calibration and testing

AI for noise reduction, system optimization, and big data

App development for hearing and acoustics

For more information about working with the EHS team, please contact Dr Mark Fletcher at: [email protected]

You can also follow us on Twitter at: https://twitter.com/ElectroHaptics

Peer-reviewed publications

2024

|

Our third paper of 2024, presenting our work maximizing the speech information we present through haptics, published in Nature Scientific Reports

|

|

Our second paper of 2024, presenting our neural-network-based noise-reduction approach for haptic hearing aids, published in Nature Scientific Reports

|

|

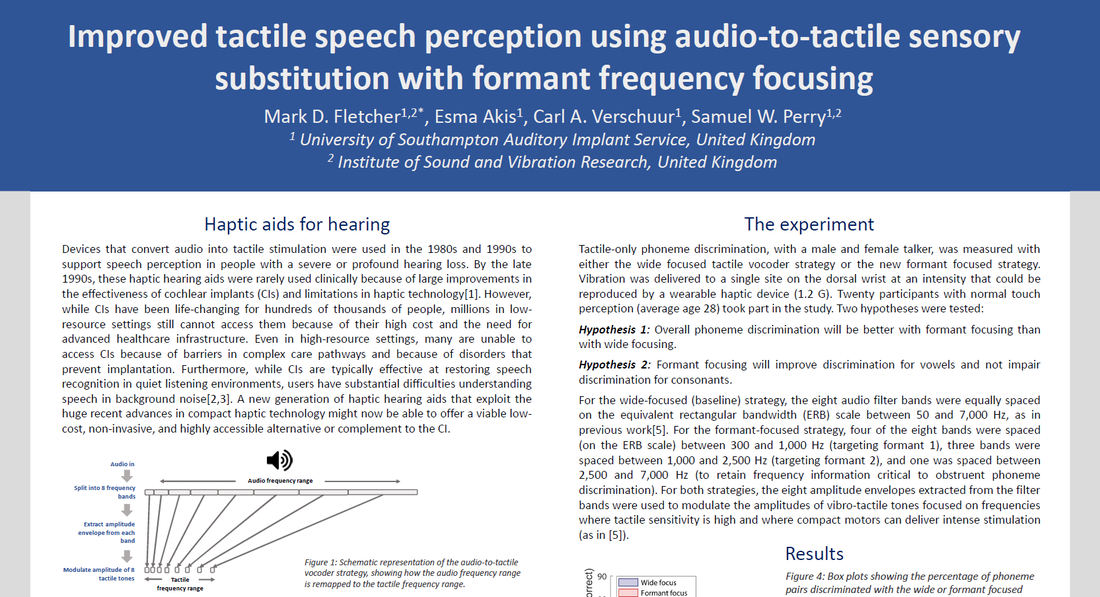

Our first paper of 2024, presenting our new approach for converting audio to haptics, published in Nature Scientific Reports

|

2023

|

Our 2023 paper, published in Nature Scientific Reports

|

|

Ahmed Bin Afif successfully defended PhD thesis

|

| ||||||

2022

|

Sam Perry's successfully defended PhD thesis

|

|

Our new paper on virtual acoustics for clinical audiology

|

2021

|

Mark's review paper on the use of haptics to enhance music perception in hearing impaired listeners, published in Frontiers in Neuroscience

|

|

Our review paper on the state of the emerging field of electro-haptics in audiology, published in Frontiers in Neuroscience

|

|

Our first paper published in Sensors

|

|

Our first paper of 2021, published in Nature Scientific Reports

|

2020

|

Our 2020 review paper on the challenges of developing a new haptic device, published in Expert Review of Medical Devices

|

|

Our forth paper of 2020, published in Nature Scientific Reports

|

|

Our third paper of 2020, published in Nature Scientific Reports

|

|

Our second paper of 2020, published in Nature Scientific Reports

|

|

Our first paper of 2020, published in Nature Scientific Reports

|

2019

|

Our 2019 paper in Nature Scientific Reports

|

2018

|

Our first EHS paper, published in Trends in Hearing

|

Presentations

2024

|

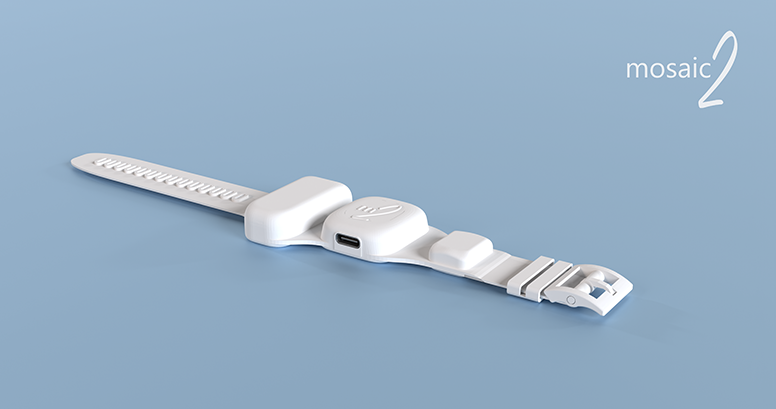

Mark's invited talk at the Sussex Hearing Lab Meeting, Brighton (UK), describing our work developing the mosaic 2 haptic aid for hearing

|

|

Mark's haptic hearing aids lecture

|

|

|

Mark and Sam present a poster and a demo of the new electro-haptic devices to the ARO in Los Angeles (USA)

|

2023

|

Sam's talk at the Basic Auditory Sciences Meeting, London (UK)

|

2022

|

Mark's invited talk at the Mathematics and Statistics department of the University of Exeter (UK)

|

|

Sam's invited talk at the UK Hearing and Audiology Sciences Meeting, Southampton (UK)

|

|

Mark's invited talk at the National Cochlear Implant Users Association, London (UK), giving an overview of our project on enhancing hearing in CI users using haptics

|

|

Mark's invited talk at the Sussex Hearing Lab Meeting, Brighton (UK), describing our work on enhancing speech perception and spatial hearing in hearing impaired listening using haptics

|

|

Mark's guest lecture on the Fundamentals of Auditory Implants module on the University of Southampton Audiology MSc course

|

|

Alberte's poster on using haptics to improve music perception in CI users, presented at the ACI Alliance CI2022 DC conference

|

2021

|

Mark's invited talk at the Music & CI Symposium, Cambridge University (UK), on enhancing music perception in CI users using haptics

|

|

|

Mark & Sam's invited talk at the Center for Music in the Brain at Aarhus University (Denmark), on enhancing cochlear implant listening using haptics

|

|

|

Sam's poster at the Music & CI Symposium, Cambridge University (UK), on enhancing pitch discrimination with haptics

|

| ||||||

|

Mark's invited talk at the Conference on Implantable Auditory Prostheses

|

|

|

Mark's invited talk at the Oticon Shaping the Future study day, London

|

|

Mark's electro-haptics guest lecture at the Nottingham Biomedical Research Centre

|

2020

|

Mark talks about improving sound localisation in cochlear implant users with haptics at the British Cochlear Implant Group meeting

|

|

Mark's electro-haptics guest lecture for the "Current Developments in Bioengineering" 3rd year BEng module at Nottingham Trent University School of Engineering and Technology

|

2019

|

Our talk at the National Cochlear Implant Users’ Association AGM

|

|

Electro-haptics visits University College London

|

2018

Media and outreach

Mark interviewed by the New York Times for an article on emerging haptic technology (2023)

Mark interviewed by the ABC (Australian Broadcasting Corporation) on Sunday Extra (2023)

EHS team provide materials and advice to Good Morning Britain on ITV for a piece on cochlear implants (2023)

Mark was the expert for the ABC news article "Haptic technology creates new ways to experience music for people who are Deaf or hard of hearing" by Sam Nichols and Sam Carmody (2023)

Mark has contributed an article to A World of Sound, a free science eBook for children (2023)

EHS featured in the newsletter of the National Cochlear Implant Users Association (2022)

"Listen with your wrists" is our new article published in Frontiers for Young Minds (2021)

Live webinar for school children on our research (2021)

Interview with Mark in The Hearing Journal (2021)

The EHS team support the Robosapiens permanent exhibition by the Science Museum of Minnesota

The EHS project featured in the British Society of Audiology magazine Audacity

Our latest work featured in The Conversation

Mark talks about neuroscience and the Electro-Haptics Project to secondary-school students for the Smallpeice Trust

Research workshop at the University of Cambridge

EHS featured in Hoorzaken

Some of the professional bodies that have shared our work:

Funding

£13k funding from the Web Science Institute for a project titled: "A web-based software training tool to improve music enjoyment for cochlear implant users" (2023-present)

£7k equipment support fund from Oticon Medical (2022)

£5k app development fund from Audika (2022)

£5k award from the Signal Processing, Audio and Hearing Research Group at the Institute of Sound and Vibration Research to explore the use of haptics to aid people with balance disorders (2022)

£450k award by the William Demant Foundation to explore electro-haptic enhancement of music perception (2021-present)

£40k invested into the Electro-haptics project by the University of Southampton Auditory Implant Service and Faculty of Engineering and Physical Sciences (2019-2022)

EPSRC Doctoral Prize awarded to Sam Perry, allowing him to join EHS as a Research Fellow (2021-2022)

£1k equipment fund to seed a collaboration between the University of Southampton and the University of Iowa awarded by the Iowa Neuroscience Institute (2020)

£500k grant to support Electro-Haptics Research (2019-present)

£155k grant awarded to support Ahmed Bin Afif, our new PhD student (2019-present)

£80k grant to support our Virtual Acoustics project (2019-present)

£10k to fund an internship for 6 months on the electro-haptics project (2019)

The team

Lab leader, University of Southampton

Ama Hadeedi, EHS Speech Enhancement, University of Southampton

Technical lead, University of Southampton

Clinical Audiology, UoS Auditory Implant Service

Robyn Cunningham, EHS Spatial Hearing, University of Southampton

Ahmed Bin Afif, EHS Speech Enhancement, University of Southampton

Noise reduction, University of Cambridge

Nour Thini, EHS Music Enhancement, University of Southampton

Tactile Neuroscience, University of Southampton

Søren Riis, Chief Research Officer, Oticon Medical

Algorithm development, University of Southampton

Bjørn Petersen, CI Neuroimaging, MIB (Uni. Aarhus)

Haoheng Song, EHS Spatial Hearing, University of Southampton

Haptic Sound-localisation, University of Southampton

Electronics, Imperial College London

Music in CI users, DTU

Research and Technology, Oticon Medical

CI Neuroimaging, MIB (Uni. Aarhus)

Mechanical Engineering, University of Iceland

Lecturer in Audiology, University of Southampton

EHS for Music, DTU

CI Music Perception, MIB (Uni. Aarhus)

Neurophysiology, University of Iowa

Neurophysiology, University of Iowa

Audio-tactile modelling, University of Exeter

Audio-tactile modelling, University of Exeter